AI Model Governance: Building Trust, Transparency, and Compliance in the Age of Intelligent Systems

Introduction: The Strategic Imperative of AI Model Governance

AI model governance has become a critical foundation for modern enterprises as AI, automation, and intelligent systems shape decision-making across industries. Organizations now recognize that responsible AI requires more than innovation: it demands governance frameworks that ensure transparency, compliance, fairness, and trust.

In an era where predictive analytics and machine-driven decisions influence finance, healthcare, hiring, operations, cybersecurity, and supply chains, AI model governance bridges the gap between technical innovation and enterprise accountability. It helps keep AI reliable, explainable, ethical, and aligned with applicable regulations around the world.

As artificial intelligence (AI) and machine learning (ML) systems become deeply embedded in enterprise decision-making, ensuring that these models operate ethically, transparently, and reliably has evolved from a purely technical concern into a strategic business imperative. AI model governance is the foundation of responsible AI: a structured framework that ensures every stage of the AI lifecycle, from model design through deployment, meets standards of transparency, compliance, and ethical integrity.

In an era defined by automation, predictive analytics, and autonomous decision systems, AI model governance enables organizations to mitigate risks, maintain accountability, and sustain trust among customers, regulators, and other stakeholders. It connects data science innovation with enterprise responsibility, so that AI delivers growth without sacrificing accountability.

What Is AI Model Governance?

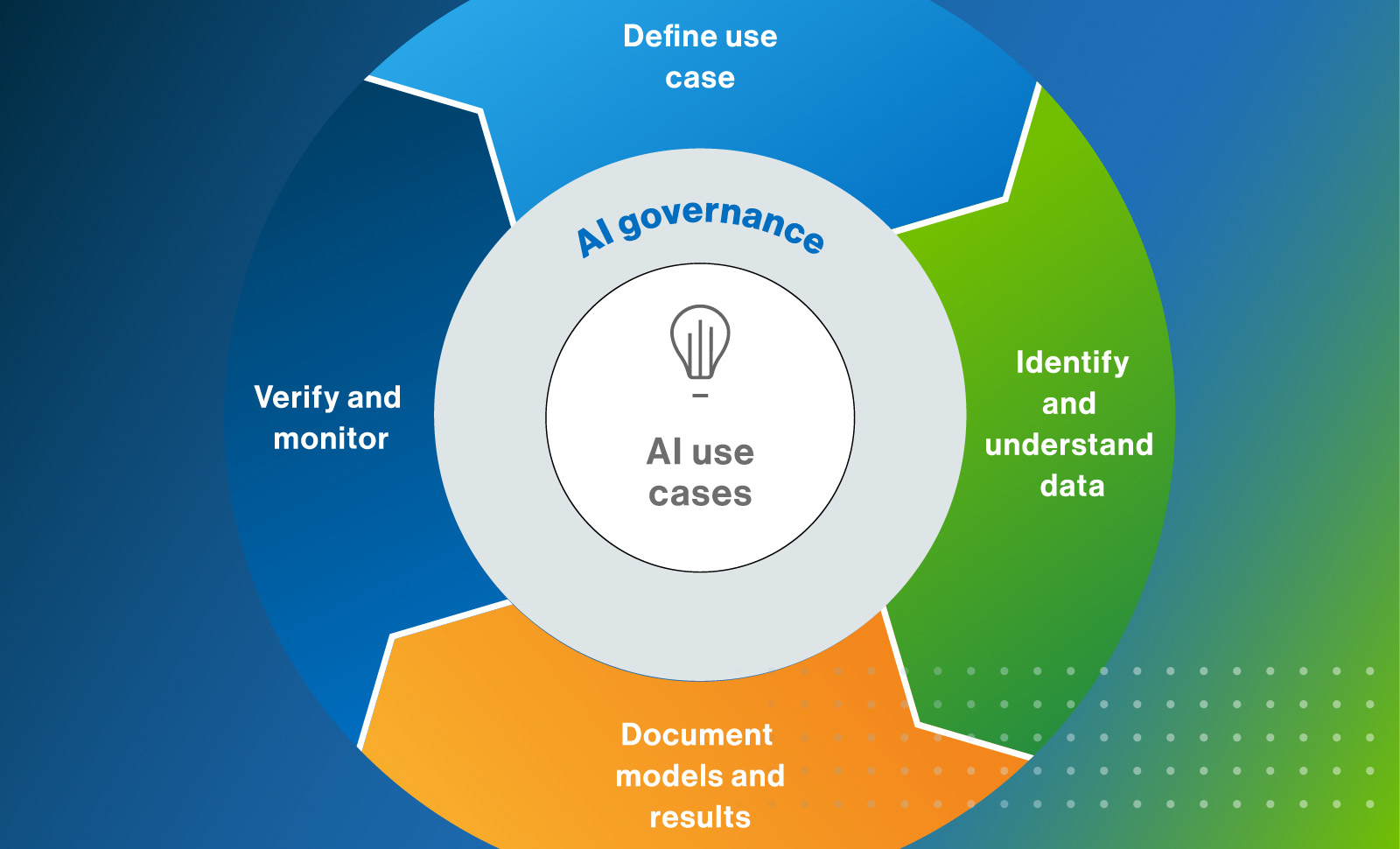

AI model governance is the systematic management of policies, processes, and tools that oversee how machine learning models are developed, deployed, and monitored. It defines how organizations ensure that AI systems are explainable, auditable, compliant, and aligned with regulatory and ethical expectations.

Just as corporate governance ensures financial transparency and accountability, AI model governance provides the framework for algorithmic transparency, fairness, and compliance. It combines elements of data governance, risk management, and technology control to ensure that AI systems are safe, interpretable, and responsible.

The Core Principles of AI Model Governance

Effective AI model governance rests upon four guiding pillars:

1. Transparency

Enterprises must understand how and why AI models make decisions. This includes model explainability, documentation of data sources, and visibility into algorithms. Transparency ensures that both internal teams and external auditors can trace the rationale behind every prediction or recommendation.

2. Accountability

Organizations must define clear ownership of AI outcomes. Whether the owner is a data scientist, compliance officer, or business executive, accountability means that a named person is responsible for the ethical and operational impact of AI-driven decisions.

3. Compliance

Regulations such as the EU AI Act and GDPR, together with guidance such as the voluntary NIST AI Risk Management Framework (AI RMF), have made compliance non-negotiable. AI model governance ensures that data privacy practices, bias controls, and algorithmic fairness meet legal and ethical standards.

4. Ethical Integrity

AI systems must respect human values, avoid bias, and maintain fairness across demographic groups. Ethical integrity demands continuous monitoring and calibration to ensure outcomes align with societal and organizational ethics.

Key Components of AI Model Governance

Model Risk Management

AI systems can introduce operational, reputational, and compliance risks if left unchecked. Model risk management identifies, assesses, and mitigates these risks through frameworks that evaluate model accuracy, bias, and long-term stability.

Financial institutions, for example, employ model risk management frameworks (MRMFs) to ensure that credit scoring, fraud detection, and trading algorithms adhere to both regulatory and ethical standards.

Regulatory Compliance

As AI adoption expands, governments and industry bodies are implementing AI-specific regulations.

- The EU AI Act classifies AI systems based on risk levels and enforces stringent requirements for high-risk systems.

- GDPR mandates transparency and accountability in automated decision-making.

- The U.S. NIST AI RMF is voluntary guidance that sets out practices for managing AI risks, including reliability and governance.

Organizations that proactively align with these frameworks gain a competitive advantage by demonstrating ethical stewardship and readiness for future compliance demands.

Data Governance

Data forms the foundation of every AI model. Poor data governance can lead to biased predictions, compliance breaches, and reputational damage. Effective AI governance integrates data lineage, quality control, and access management, ensuring that the data feeding AI models remains secure, accurate, and representative.
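Of these three activities, quality control is the easiest to show in code. The sketch below assumes incoming records arrive as Python dicts. It checks one batch for three things: completeness, type validity, and undeclared columns. The function name `check_quality` and the 5% null-rate default are hypothetical choices for illustration. Lineage tracking and access management are not covered.

```python
from typing import Any


def check_quality(
    rows: list[dict[str, Any]],
    schema: dict[str, type],
    max_null_rate: float = 0.05,  # hypothetical tolerance; set by data governance policy
) -> list[str]:
    """Return human-readable issues for a batch of records; an empty list means it passed."""
    if not rows:
        return ["batch is empty"]
    issues: list[str] = []
    for column, expected_type in schema.items():
        values = [row.get(column) for row in rows]
        # Completeness: share of records where the column is absent or None.
        null_rate = sum(v is None for v in values) / len(rows)
        if null_rate > max_null_rate:
            issues.append(f"{column}: null rate {null_rate:.0%} exceeds {max_null_rate:.0%}")
        # Validity: non-null values must have the declared type.
        wrong = [v for v in values if v is not None and not isinstance(v, expected_type)]
        if wrong:
            issues.append(f"{column}: {len(wrong)} value(s) are not {expected_type.__name__}")
    # Schema drift: columns that appear in the data but were never declared.
    unexpected = {key for row in rows for key in row} - set(schema)
    if unexpected:
        issues.append(f"unexpected columns: {sorted(unexpected)}")
    return issues
```

In a pipeline, a non-empty result would typically stop the batch from reaching training or scoring until someone reviews it.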

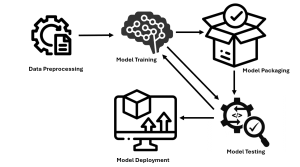

Version Control and Model Lifecycle Management

Just as software versions are tracked, machine learning models require version control to document iterations, parameters, and datasets used during training. This enables traceability, reproducibility, and accountability throughout the AI lifecycle — from experimentation to production deployment.
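The sketch below is a minimal version of this idea, assuming parameters and training data can be serialized to JSON. Each registered version records content hashes of its hyperparameters and its training data, so any production model can be traced back to exactly what produced it. `ModelRegistry`, `ModelVersion`, and `fingerprint` are hypothetical names. Dedicated tools such as MLflow's model registry provide persistent storage and many more features.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any


def fingerprint(obj: Any) -> str:
    """SHA-256 of a canonical JSON encoding, so identical content always hashes identically."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    params_hash: str
    data_hash: str
    metrics: dict[str, float] = field(default_factory=dict)


class ModelRegistry:
    """Hypothetical in-memory registry; a production system would persist these records."""

    def __init__(self) -> None:
        self._versions: dict[str, list[ModelVersion]] = {}

    def register(self, name: str, params: dict, training_data: Any,
                 metrics: dict[str, float]) -> ModelVersion:
        history = self._versions.setdefault(name, [])
        entry = ModelVersion(
            name=name,
            version=len(history) + 1,  # increases monotonically per model name
            params_hash=fingerprint(params),
            data_hash=fingerprint(training_data),
            metrics=dict(metrics),
        )
        history.append(entry)
        return entry

    def history(self, name: str) -> list[ModelVersion]:
        return list(self._versions.get(name, []))
```

`fingerprint` sorts keys before hashing. As a result, two runs with the same settings produce the same hash regardless of dict ordering, which is what makes reproducibility checks possible. For large datasets you would hash the stored files or a dataset snapshot identifier rather than the raw rows.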

Model Explainability

One of the most critical aspects of governance is the ability to explain AI-driven decisions in a human-understandable way. Explainable AI (XAI) frameworks provide tools and techniques to interpret how input data leads to specific outputs, reducing the “black box” effect and ensuring compliance with explainability requirements.
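Permutation importance is one widely used, model-agnostic XAI technique: shuffle one input feature and measure how much the model's accuracy drops. The sketch below implements it in plain Python for any `predict` function that maps a feature row to a class label. It illustrates a single technique; others include SHAP and LIME. The accuracy metric and the default of 10 repeats are assumptions.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(
    predict: Callable[[Row], int],
    X: Sequence[Row],
    y: Sequence[int],
    n_repeats: int = 10,
    seed: int = 0,
) -> list[float]:
    """Mean drop in accuracy when one feature column is shuffled, breaking its link to y."""
    rng = random.Random(seed)
    baseline = accuracy(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            permuted = []
            for row, value in zip(X, column):
                new_row = list(row)
                new_row[j] = value  # only feature j changes; all others stay intact
                permuted.append(new_row)
            drops.append(baseline - accuracy(y, [predict(row) for row in permuted]))
        importances.append(sum(drops) / n_repeats)
    return importances
```

A feature the model ignores scores 0.0, while a large drop marks a feature the decisions depend on. Permutation importance explains the model's global behavior. Explaining an individual decision, such as a single declined loan, usually calls for local methods.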

Managing Bias, Drift, and Model Performance

Bias Detection and Mitigation

Bias can enter AI models through imbalanced data, flawed assumptions, or poorly defined objectives. Governance frameworks incorporate bias detection tools, fairness audits, and representative training data to minimize these risks. For example, a healthcare diagnostic model should be validated for comparable accuracy across gender, ethnicity, and age groups.
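A fairness audit like the diagnostic-model example can start with per-group accuracy. The sketch below computes accuracy separately for each group label and reports the largest gap between groups. Accuracy parity is only one of several fairness criteria; demographic parity and equalized odds are common alternatives. Choosing among them is a policy decision, not a purely technical one.

```python
from collections import defaultdict
from collections.abc import Hashable, Sequence


def accuracy_by_group(y_true: Sequence[int], y_pred: Sequence[int],
                      groups: Sequence[Hashable]) -> dict:
    """Accuracy computed separately for each demographic group label."""
    correct: dict = defaultdict(int)
    total: dict = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(y_true: Sequence[int], y_pred: Sequence[int],
                 groups: Sequence[Hashable]) -> float:
    """Largest difference in accuracy between any two groups (0.0 means parity)."""
    per_group = accuracy_by_group(y_true, y_pred, groups)
    return max(per_group.values()) - min(per_group.values())
```

A governance policy might flag the model for review when the gap exceeds an agreed tolerance. Any specific value, such as 0.05, is a hypothetical example that a fairness review board would need to set.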

Model Drift Management

A deployed model is fixed at training time, but the data it sees keeps changing. Model drift, the gradual degradation of accuracy as production data or the relationship between inputs and outcomes shifts away from what the model was trained on, is one of the most overlooked risks in production AI. Governance processes establish continuous monitoring pipelines that detect drift and trigger retraining to preserve performance.
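Drift detection often compares the distribution of a feature or model score in production against a training-time reference. The sketch below uses the population stability index (PSI). PSI is the sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the shares of reference and current values that fall in bin i. Equal-width bins and the `eps` floor for empty bins are simplifying assumptions; quantile-based bins are a common alternative.

```python
import math
from typing import Sequence


def population_stability_index(reference: Sequence[float], current: Sequence[float],
                               n_bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum((a - e) * ln(a / e)) over bins defined on the reference sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant reference sample

    def bin_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * n_bins
        for v in values:
            # Equal-width bins; values outside the reference range fall into the edge bins.
            idx = min(max(int((v - lo) / width), 0), n_bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or a division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected = bin_shares(reference)
    actual = bin_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A frequently quoted rule of thumb interprets PSI in three bands. Below 0.1 is treated as stable, 0.1 to 0.25 as moderate shift, and above 0.25 as significant shift that warrants investigation or retraining. These cutoffs are conventions rather than a standard, so a governance policy should set its own.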

Performance Monitoring and Validation

Robust governance mandates regular model validation to confirm that performance metrics align with business objectives. This includes stress testing, scenario simulation, and real-world validation to ensure models remain relevant and reliable over time.
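Validation rules like these can be encoded as an automated promotion gate. The sketch below checks a candidate model's metrics against business-defined minimums and against the current production ("champion") model. The `Threshold` type, the 0.01 allowed regression, and the metric names are hypothetical. Stress tests and scenario simulations fit the same pattern: compute the metrics on each scenario's data and run the gate once per scenario.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Threshold:
    metric: str
    minimum: float  # business-defined floor, e.g. a minimum recall on fraud cases


def validate(metrics: dict[str, float], thresholds: list[Threshold],
             champion: Optional[dict[str, float]] = None,
             max_regression: float = 0.01) -> list[str]:
    """Return failure messages; an empty list means the candidate may be promoted."""
    failures = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            failures.append(f"{t.metric}: not reported")
        elif value < t.minimum:
            failures.append(f"{t.metric}: {value:.3f} below minimum {t.minimum:.3f}")
        elif champion and t.metric in champion and value < champion[t.metric] - max_regression:
            # Guard against silently replacing a better production model.
            failures.append(
                f"{t.metric}: {value:.3f} regresses from champion {champion[t.metric]:.3f}"
            )
    return failures
```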

Establishing Responsible AI Policies and Frameworks

Enterprises leading in AI adoption have formalized Responsible AI (RAI) frameworks that codify principles of fairness, privacy, accountability, and transparency.

Key Practices for Responsible AI Implementation:

- Ethics Boards & AI Councils: Establish multidisciplinary governance boards to oversee ethical AI usage.

- Model Documentation (Model Cards): Maintain comprehensive records detailing model purpose, limitations, and performance.

- Audit Trails: Ensure traceability of all AI decisions for compliance and accountability.

- Employee Training: Educate data scientists, engineers, and decision-makers on ethical and regulatory considerations.
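One way to make an audit trail tamper-evident is to chain entries by hash. Each record stores the hash of the one before it, so editing a past record is detectable unless every later entry is rewritten too. The sketch below is a minimal in-memory version, assuming each decision can be logged as JSON-serializable inputs plus an output. `AuditLog` and its field names are hypothetical, and a production system would also write entries to append-only storage.

```python
import hashlib
import json
import time
from typing import Any, Optional


class AuditLog:
    """Hypothetical append-only decision log where each entry stores the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    @staticmethod
    def _digest(record: dict[str, Any]) -> str:
        # Hash every field except the stored hash itself, with keys in a fixed order.
        body = {k: v for k, v in record.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, model_version: str, inputs: dict[str, Any], decision: Any,
               timestamp: Optional[float] = None) -> dict[str, Any]:
        record = {
            "model_version": model_version,
            "inputs": inputs,
            "decision": decision,
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute the chain; any edited, removed, or reordered entry makes this False."""
        prev_hash = self.GENESIS
        for record in self.entries:
            if record["prev_hash"] != prev_hash or record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True
```

Hash chaining detects tampering but does not prevent it. Logging raw inputs may also create privacy obligations, so real deployments often log references or redacted fields instead.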

Case Example: Financial Sector

Global banks such as J.P. Morgan and HSBC have implemented model governance frameworks that combine technical validation with ethical oversight. They use model risk committees to evaluate algorithms’ fairness, compliance, and operational impact — ensuring responsible AI deployment in high-stakes environments.

AI Model Governance Across Industries

1. Healthcare

In healthcare, AI models assist in diagnostics, drug discovery, and personalized treatment. Governance ensures that models comply with regulations like HIPAA and FDA guidelines, and remain unbiased across patient demographics. For example, AI-enabled imaging systems must undergo rigorous validation to prevent misdiagnosis or demographic skew.

2. Finance

Financial institutions rely on AI for credit scoring, fraud detection, and investment strategies. Governance frameworks ensure adherence to Basel regulations, GDPR, and anti-discrimination laws, protecting customers from algorithmic bias while ensuring transparent, explainable financial decisions.

3. Manufacturing

Manufacturers use AI to optimize supply chains, predict equipment failure, and enhance quality assurance. Model governance helps maintain data security, ensure reliability in predictive maintenance, and support compliance with industrial safety and data protection standards.

Emerging Trends in AI Model Governance

AI Observability

AI observability extends beyond traditional monitoring to provide holistic visibility into model performance, data pipelines, and system health. It enables organizations to detect anomalies, data drift, and bias in real time, supporting continuous reliability.
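Observability platforms use far more sophisticated detectors, but the basic idea of real-time anomaly detection can be sketched with a rolling z-score. Each new value of a monitored metric is compared with the mean and standard deviation of a recent window of values. Example metrics include the mean predicted probability per batch or request latency. `RollingZScoreMonitor` and its defaults are hypothetical choices for illustration: a window of 50, a threshold of 3 standard deviations, and 10 observations of warm-up.

```python
import math
from collections import deque


class RollingZScoreMonitor:
    """Flags metric values that sit far from the mean of a recent window."""

    def __init__(self, window: int = 50, z_threshold: float = 3.0, min_history: int = 10):
        self.values: deque = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to the window, then add it to the window."""
        anomalous = False
        if len(self.values) >= self.min_history:  # no verdict until enough history exists
            mean = sum(self.values) / len(self.values)
            std = math.sqrt(sum((v - mean) ** 2 for v in self.values) / len(self.values))
            if std > 0:
                anomalous = abs(value - mean) / std > self.z_threshold
            else:
                anomalous = value != mean  # any change from a perfectly constant signal
        self.values.append(value)
        return anomalous
```

This simple version adds anomalous values to the window as well. A sustained shift therefore gradually becomes the new baseline, so the alert fires at the shift and then fades. Whether that behavior is desirable depends on whether the alert should persist.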

Continuous Validation

Static model validation is no longer sufficient. Enterprises are adopting continuous validation loops that automate performance checks, retraining triggers, and compliance audits — creating a living governance ecosystem that evolves alongside the AI model.

Responsible AI and Ethical Auditing

As AI ethics becomes central to public trust, responsible AI initiatives now integrate third-party audits, ethical scoring, and algorithmic transparency reports to ensure unbiased, human-centered outcomes.

Automated Compliance Tooling

Modern platforms are introducing AI-driven compliance systems that automate regulatory checks, generate audit reports, and maintain policy adherence. These tools reduce human error and streamline governance across complex AI ecosystems.
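Automated compliance checks are often written as "policy as code": machine-readable rules evaluated against model metadata such as a model card. In the sketch below, the required fields and the extra rule for high-risk models are hypothetical internal policy chosen for illustration. They are not a checklist derived from the EU AI Act or any other regulation.

```python
from typing import Any

# Hypothetical internal policy: every model card must document these fields.
REQUIRED_FIELDS = {"purpose", "owner", "training_data", "limitations", "metrics"}


def compliance_report(model_card: dict[str, Any], high_risk: bool) -> dict[str, Any]:
    """Evaluate a model card against the policy and return an audit-ready summary."""
    missing = sorted(f for f in REQUIRED_FIELDS if not model_card.get(f))
    findings = [f"missing or empty field: {f}" for f in missing]
    # Hypothetical extra rule applied only to models classified as high-risk.
    if high_risk and not model_card.get("human_oversight"):
        findings.append("high-risk model lacks a documented human-oversight procedure")
    return {
        "model": model_card.get("name", "<unnamed>"),
        "compliant": not findings,
        "findings": findings,
    }
```

Running a check like this in the deployment pipeline turns documentation gaps into blocking findings. It also produces a record that can feed an audit report.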

Challenges in Implementing AI Model Governance

Despite its strategic importance, AI model governance presents challenges:

- Lack of Standardization: Global variations in AI laws create fragmented compliance landscapes.

- Complexity of Explainability: Deep learning models, especially large neural networks, remain difficult to interpret.

- Data Privacy Concerns: Balancing transparency with confidentiality remains an ongoing dilemma.

- Cultural Resistance: Aligning cross-functional teams — data science, legal, and business — under a unified governance framework can be challenging.

Forward-looking enterprises overcome these challenges by integrating MLOps, governance automation, and ethical leadership into their AI ecosystem.

Future Outlook: Governance as the Cornerstone of Trustworthy AI

As AI becomes the foundation of digital transformation, governance will define which organizations thrive in the next decade of intelligent automation. Enterprises that integrate AI model governance into their data and operational DNA will not only ensure compliance but also earn a sustainable competitive edge built on trust, transparency, and accountability.

In the coming years, expect to see governance frameworks evolve from manual oversight to self-regulating, AI-powered governance ecosystems capable of enforcing compliance, optimizing performance, and ensuring ethics at scale.

Conclusion

AI model governance is not just a regulatory requirement — it’s the strategic backbone of responsible digital transformation. By embedding governance across every stage of the AI lifecycle, organizations create systems that are not only innovative but also ethical, explainable, and compliant.