Discover how AI Model Lifecycle Management (MLLM) transforms enterprise AI into a continuously learning, governed, and self-optimizing intelligence engine — ensuring trust, compliance, and scalable performance.

Introduction: From Model Chaos to Intelligent Continuity

AI has matured beyond experimentation. Enterprises now require AI Model Lifecycle Management to sustain intelligence, prevent model decay, and ensure compliance across evolving data and regulatory landscapes.

- Introduction: From Model Chaos to Intelligent Continuity

- The Essence of AI Model Lifecycle Management

- Why Model Lifecycle Management Is Now a Boardroom Conversation

- The Lifecycle: From Data Genesis to Intelligent Renewal
  - 4.1 Data Engineering & Feature Discovery
  - 4.2 Model Development & Experimentation
  - 4.3 Validation, Testing, and Fairness Checks
  - 4.4 Deployment & Integration
  - 4.5 Continuous Monitoring & Drift Detection
  - 4.6 Retraining & Reinforcement
  - 4.7 Governance & Compliance

- Architecting the MLLM Ecosystem: From Frameworks to Intelligence

- MLOps: The Operational Backbone of Lifecycle Management

- Governance and Responsible AI: Trust as the Core KPI

- The Business Value: Turning Lifecycle Discipline into Competitive Advantage

- Challenges on the Path to Maturity

- Emerging Horizons: The Future of Lifecycle Intelligence

- Implementation Blueprint: How to Operationalize MLLM in the Enterprise

- Case in Point: Lifecycle Intelligence in Action

- Conclusion: Building the Self-Evolving Enterprise

- Key Takeaways

Artificial Intelligence has matured beyond experimentation.

Across the enterprise world, AI is no longer a prototype living in silos — it’s a living, evolving organism embedded in every digital process, every decision layer, and every customer experience.

But as organizations scale their AI operations, they face a fundamental challenge: how to sustain intelligence.

Models decay. Data drifts. Regulations evolve. What once delivered insight begins to misfire under new conditions. The solution is not more models — it’s model lifecycle mastery.

This is where AI Model Lifecycle Management (MLLM) becomes the silent architecture of intelligent transformation.

It’s not merely about deploying models — it’s about orchestrating intelligence as a continuously learning system, balancing automation, governance, and evolution.

In this new paradigm, enterprises don’t just use AI — they govern, optimize, and extend it with precision and trust.

The Essence of AI Model Lifecycle Management

AI Model Lifecycle Management (MLLM) is the end-to-end discipline of designing, deploying, monitoring, and continuously improving AI models in production.

It brings DevOps discipline, data governance, and machine learning operations (MLOps) under a unified framework, enabling enterprises to transform AI from a project into a platform.

Where early AI systems were reactive — trained once, deployed once — modern AI ecosystems are dynamic.

They evolve through feedback loops, retraining pipelines, and intelligent governance layers that ensure every model remains compliant, explainable, and high-performing.

Think of MLLM as the central nervous system of enterprise AI — integrating models, data, infrastructure, and compliance into a synchronized flow of intelligence.

Why Model Lifecycle Management Is Now a Boardroom Conversation

Five years ago, “model management” was a data science issue.

Today, it’s a C-suite priority — impacting regulatory exposure, customer trust, and business competitiveness.

Enterprises now operate under pressures that demand a systemic approach:

- Scale: Thousands of models deployed across products, geographies, and business units.

- Risk: Rising scrutiny around AI bias, privacy, and explainability.

- Velocity: Real-time data requires real-time adaptation.

- Governance: Regulations and standards such as the EU AI Act, GDPR, and ISO/IEC 23894 (guidance on AI risk management) call for auditable AI pipelines.

In this environment, AI without lifecycle management is a liability.

But AI with MLLM becomes a differentiator — turning model governance into a source of competitive trust and innovation velocity.

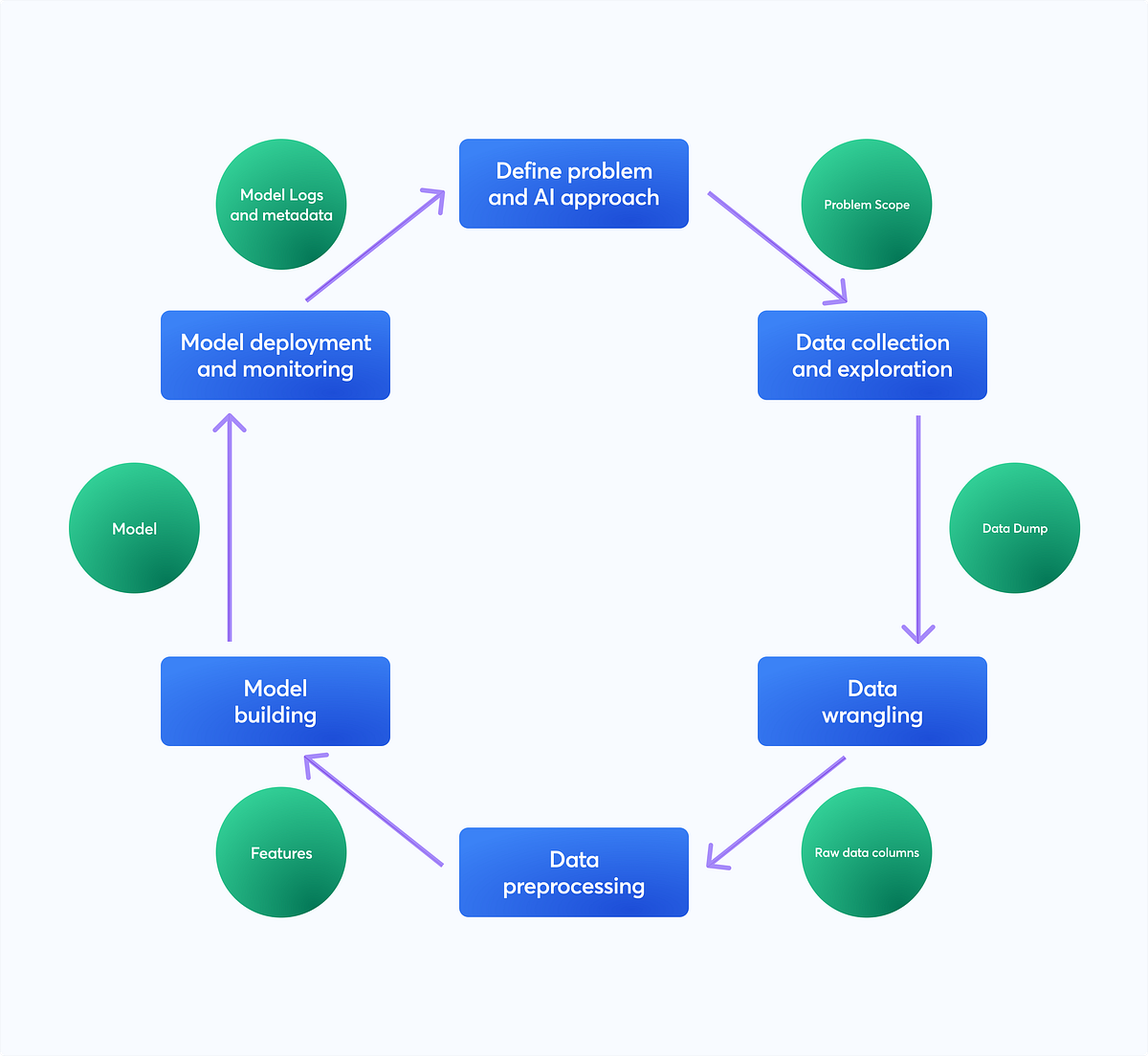

The Lifecycle: From Data Genesis to Intelligent Renewal

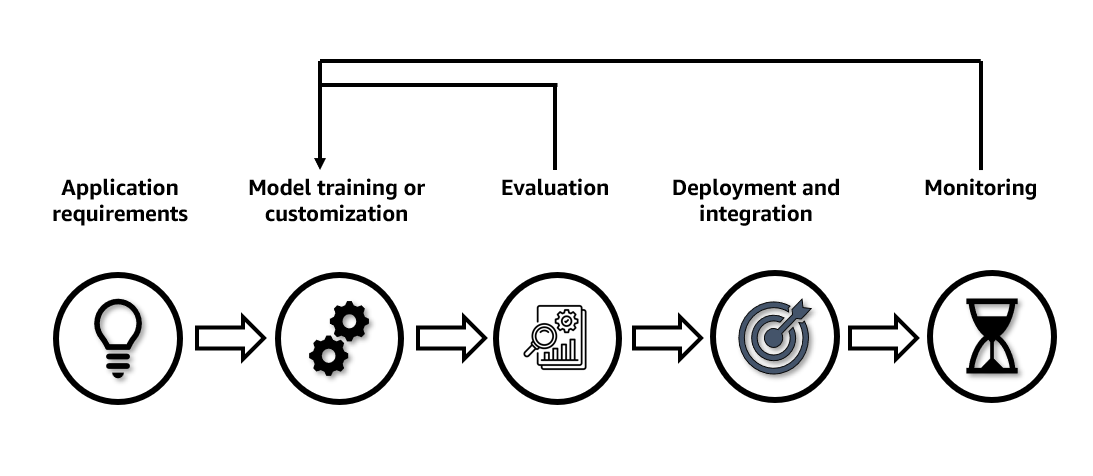

At its core, the AI model lifecycle is a continuous cycle of creation, evaluation, and evolution.

Each stage is interconnected, feeding intelligence into the next.

1. Data Engineering & Feature Discovery

The journey begins with data foundation — the quality, diversity, and traceability of training data.

Enterprises use data lakes, feature stores, and automated labeling pipelines to ensure every model is built on trustworthy, lineage-tracked data.
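To make "lineage-tracked" concrete, here is a minimal sketch of a dataset version record that fingerprints its rows and points back to the version it was derived from. The names `DatasetVersion`, `fingerprint`, and `register` are hypothetical and chosen for illustration; a real feature store or lineage system exposes its own API and tracks far more metadata.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DatasetVersion:
    """Hypothetical lineage record; not the schema of any specific product."""
    name: str
    source: str                 # the system or pipeline step that produced the rows
    content_hash: str           # fingerprint of the rows themselves
    parent_hash: Optional[str]  # fingerprint of the version this one was derived from


def fingerprint(rows: list) -> str:
    """Hash rows in canonical JSON so key order inside a row does not matter.

    Row order still matters: reordering rows yields a different hash.
    """
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def register(name: str, source: str, rows: list,
             parent: Optional[DatasetVersion] = None) -> DatasetVersion:
    """Create a version record that links back to its parent, if any."""
    parent_hash = parent.content_hash if parent else None
    return DatasetVersion(name, source, fingerprint(rows), parent_hash)
```

Registering a raw extract and then a filtered copy with `parent=` set produces two records whose hashes form a traceable chain from training data back to its origin.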

“Data isn’t just the raw material for AI — it’s the ethical and operational DNA of the enterprise.”

2. Model Development & Experimentation

Here, data scientists and AI engineers prototype algorithms, tune hyperparameters, and test hypotheses.

Modern MLLM platforms (like Databricks MLflow, Google Vertex AI, or Azure ML) allow for version-controlled experimentation, capturing metadata, dependencies, and metrics in real time.
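The idea of version-controlled experimentation can be sketched without any platform at all. The in-memory `ExperimentLog` below is a hypothetical stand-in, not the API of MLflow, Vertex AI, or Azure ML; it only illustrates what such tools record for each run: parameters, metrics, and tags such as a code commit.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class Run:
    run_id: str
    params: dict
    metrics: dict = field(default_factory=dict)
    tags: dict = field(default_factory=dict)   # e.g. git commit, dataset hash
    started_at: float = field(default_factory=time.time)


class ExperimentLog:
    """Hypothetical in-memory tracker, for illustration only."""

    def __init__(self):
        self.runs = []

    def start_run(self, params: dict, **tags) -> Run:
        run = Run(run_id=uuid.uuid4().hex, params=dict(params), tags=tags)
        self.runs.append(run)
        return run

    def best(self, metric: str, higher_is_better: bool = True) -> Run:
        """Return the run with the best value for `metric`.

        Raises ValueError if no run has logged that metric.
        """
        scored = [r for r in self.runs if metric in r.metrics]
        pick = max if higher_is_better else min
        return pick(scored, key=lambda r: r.metrics[metric])

    def to_json(self) -> str:
        """Serialize every run so the history can be archived or diffed."""
        return json.dumps([asdict(r) for r in self.runs], indent=2)
```

A team would typically call `start_run` once per training attempt, fill in `run.metrics` after evaluation, and use `best("auc")` to pick a candidate for validation.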

3. Validation, Testing, and Fairness Checks

Before any model sees production, it undergoes rigorous evaluation — not just for accuracy, but for bias, robustness, and explainability.

MLLM integrates AI fairness frameworks (like IBM’s AIF360) to ensure compliance and ethical alignment.
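One of the simplest bias checks a validation stage can run is the demographic parity difference: the gap between the highest and lowest positive-prediction rate across groups. The sketch below is hand-rolled for illustration and is not AIF360's API; the function names and the `max_gap=0.1` threshold are assumptions that a real policy would need to set deliberately.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (== 1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the most and least favored group; 0.0 means equal rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def passes_fairness_gate(predictions, groups, max_gap=0.1):
    """Hypothetical release gate: block models whose gap exceeds max_gap."""
    return demographic_parity_difference(predictions, groups) <= max_gap
```

Demographic parity is only one fairness definition among several, and different definitions can conflict, so a production gate would usually combine more than one metric.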

4. Deployment & Integration

Deploying AI is no longer a handoff — it’s an orchestrated launch.

Containerization (Docker), container orchestration (Kubernetes), and CI/CD pipelines automate model rollout, A/B testing, and rollback.

This ensures models move seamlessly from lab to production with minimal friction.
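A gradual rollout with automatic rollback can be reduced to two decisions: which model version serves a given request, and when the new version should be withdrawn. The following is a minimal sketch under stated assumptions; `route`, `should_rollback`, and the default thresholds are hypothetical, and in practice this logic usually lives in a service mesh, gateway, or deployment controller rather than in application code.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Deterministically send roughly canary_percent% of requests to the canary.

    Hashing the request (or user) id keeps routing stable across retries.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "canary" if bucket < canary_percent else "stable"


def should_rollback(stable_errors: int, stable_total: int,
                    canary_errors: int, canary_total: int,
                    tolerance: float = 0.02, min_requests: int = 100) -> bool:
    """Roll back when the canary's error rate exceeds stable's by more than tolerance.

    With fewer than min_requests canary requests there is too little
    evidence, so the function declines to roll back.
    """
    if canary_total < min_requests:
        return False
    stable_rate = stable_errors / stable_total if stable_total else 0.0
    canary_rate = canary_errors / canary_total
    return canary_rate > stable_rate + tolerance
```

The fixed tolerance is a deliberate simplification; a statistically grounded comparison would be a sensible refinement once traffic volumes are known.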

5. Continuous Monitoring & Drift Detection

Once live, models are monitored like digital organisms — tracked for prediction drift, data anomalies, latency, and performance decay.

AIOps dashboards visualize model health and trigger retraining workflows when performance falls below agreed thresholds.
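One widely used drift signal is the Population Stability Index (PSI), which compares how a feature or score is distributed in production against its distribution at training time. The sketch below is a plain-Python illustration, assuming equal-width bins derived from the baseline and a small `eps` floor to avoid division by zero; the bin count and any alert threshold are assumptions to tune per feature.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a live sample.

    Bins are equal-width over the baseline's range; live values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A PSI of zero means the two samples fall into the bins identically, and larger values mean a larger shift. Teams often alert somewhere around 0.1 to 0.25, but that range is a rule of thumb rather than a standard.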

6. Retraining & Reinforcement

When models encounter new realities, they learn again.

Retraining pipelines pull new data, revalidate assumptions, and redeploy optimized versions.

This loop of continuous learning transforms static systems into evolving intelligence engines.
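The retraining loop hinges on two gates: when to start retraining, and whether the retrained model deserves to replace the one in production. A minimal sketch follows, assuming a single scalar quality score where higher is better; `needs_retraining`, `promote`, and their default thresholds are hypothetical.

```python
def needs_retraining(recent_scores, baseline_score, max_drop=0.05, window=3):
    """Trigger only after `window` consecutive evaluations fall below the floor.

    Requiring a sustained drop avoids retraining on a single noisy reading.
    """
    if len(recent_scores) < window:
        return False
    floor = baseline_score - max_drop
    return all(score < floor for score in recent_scores[-window:])


def promote(champion_score, challenger_score, min_gain=0.01):
    """Replace the production model only if the retrained one is clearly better."""
    return challenger_score >= champion_score + min_gain
```

Both scores passed to `promote` should come from the same held-out data, otherwise the comparison says more about the data than about the models.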

7. Governance & Compliance

Every decision, dataset, and parameter must be auditable.

MLLM enforces policies, captures lineage, and maintains explainability — ensuring trust by design.

It’s where transparency meets traceability — and where compliance becomes code.
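"Compliance becomes code" can be read literally: each governance rule is a small function that a release pipeline evaluates before a model ships. The sketch below is one hypothetical way to express that; the `ModelCard` fields, the policy names, and the 0.1 fairness threshold are illustrative assumptions, not requirements drawn from any regulation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelCard:
    """Hypothetical metadata a release pipeline could require for each model."""
    name: str
    owner: str = ""
    training_data_hash: str = ""
    fairness_gap: Optional[float] = None
    explanation_method: str = ""


# Each policy is a (name, check) pair; a check returns True when satisfied.
POLICIES = [
    ("has_owner", lambda m: bool(m.owner)),
    ("data_lineage_recorded", lambda m: bool(m.training_data_hash)),
    ("fairness_checked", lambda m: m.fairness_gap is not None and m.fairness_gap <= 0.1),
    ("explainability_documented", lambda m: bool(m.explanation_method)),
]


def violations(model: ModelCard) -> list:
    """Names of every policy the model fails; an empty list means it may ship."""
    return [name for name, check in POLICIES if not check(model)]
```

Because the policies are ordinary data, they can be versioned and reviewed like any other code, which is what makes the resulting decisions auditable.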

Architecting the MLLM Ecosystem: From Frameworks to Intelligence

A robust AI Model Lifecycle Management ecosystem is built on modular yet integrated components.

Below is an architectural blueprint common to top-tier enterprise AI environments:

| Layer | Key Components | Purpose |
| --- | --- | --- |
| Data Foundation | Data lakehouse, feature stores, lineage systems | Reliable, governed data inputs |
| Experimentation & Development | Jupyter, MLflow, SageMaker, Vertex AI | Collaborative model creation |
| Orchestration & Automation | Kubeflow, Airflow, Jenkins | CI/CD automation and scheduling |
| Deployment Layer | APIs, microservices, containers | Scalable model delivery |
| Monitoring & Feedback | Prometheus, Evidently AI, Neptune | Real-time performance tracking |
| Governance & Security | Policy engines, explainability dashboards, audit logs | Compliance and trust |

This architecture isn’t static.

It’s designed to evolve — scaling horizontally across cloud and hybrid environments while maintaining centralized visibility and automated control.

MLOps: The Operational Backbone of Lifecycle Management

If MLLM is the philosophy, MLOps is the machinery.

It fuses DevOps discipline with machine learning pipelines, turning experimentation into continuous production.

Key Dimensions of MLOps within MLLM

- Automation: CI/CD pipelines automate deployment and retraining.

- Observability: Monitoring systems detect drift, latency, or data anomalies.

- Reproducibility: Every experiment is versioned — data, code, and configuration.

- Scalability: Distributed environments handle massive datasets and parallel training.

- Collaboration: Unified dashboards connect data scientists, engineers, and compliance teams.

Modern enterprises use MLOps not just to speed up AI delivery — but to govern AI velocity without losing integrity.

Governance and Responsible AI: Trust as the Core KPI

In enterprise AI, performance isn’t the only metric — trust is.

Governance ensures AI models remain ethical, explainable, and accountable.

1. Explainability

Every model decision must be interpretable to both data scientists and regulators.

Techniques like LIME, SHAP, and counterfactual explanations translate opaque algorithms into transparent reasoning.
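SHAP builds on Shapley values, which split a prediction's difference from a baseline fairly across the input features. For a handful of features the values can be computed exactly by enumerating every coalition, which the sketch below does. It is not the `shap` library: the function name and the choice to "remove" a feature by substituting a baseline value are assumptions, and the cost grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attribution of predict(instance) - predict(baseline).

    A feature outside a coalition takes its baseline value.
    """
    n = len(instance)
    features = range(n)

    def value(coalition):
        x = [instance[i] if i in coalition else baseline[i] for i in features]
        return predict(x)

    attributions = []
    for i in features:
        others = [j for j in features if j != i]
        phi = 0.0
        for size in range(n):
            # Probability that a coalition of this size precedes feature i
            # in a uniformly random ordering of the features.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        attributions.append(phi)
    return attributions
```

The attributions always sum to the gap between the instance's prediction and the baseline's, which is the property that makes them usable as an explanation for regulators and data scientists alike.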

2. Fairness & Bias Mitigation

MLLM integrates bias detection during validation, ensuring fairness across demographic groups.

By embedding fairness audits in pipelines, enterprises move from reactive ethics to proactive responsibility.

3. Compliance & Auditability

Regulations such as the EU AI Act impose record-keeping and traceability obligations, particularly on high-risk AI systems.

MLLM systems automatically log:

- Dataset versions

- Model lineage

- Parameter configurations

- Decision records

This creates a chain of trust — making AI not only powerful but legally and ethically sound.
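A "chain of trust" can be taken literally by hash-chaining audit entries, so that altering any past record invalidates everything after it. The `AuditLog` class below is a hypothetical minimal sketch of that idea; a real system would also need durable storage, access control, and trusted timestamps.

```python
import hashlib
import json


class AuditLog:
    """Append-only log in which each entry commits to the one before it."""

    GENESIS = "0" * 64  # placeholder hash that precedes the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        payload = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> dict:
        """Record an event such as a dataset version, config, or decision."""
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {"event": event, "prev_hash": prev_hash,
                 "hash": self._digest(prev_hash, event)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edit to a past event breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True
```

Dataset versions, model lineage, parameter configurations, and decision records can all be appended as events, and an auditor can call `verify()` to confirm that nothing was rewritten after the fact.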

The Business Value: Turning Lifecycle Discipline into Competitive Advantage

Executives often ask, “What’s the ROI of managing AI models so rigorously?”

The answer lies in velocity, visibility, and viability.

1. Accelerated Innovation

Automated retraining and CI/CD pipelines can shorten the feedback loop from months to days or even hours.

Teams can experiment, deploy, and iterate faster — without compromising quality.

2. Operational Resilience

Continuous monitoring ensures models adapt to shifting data realities — minimizing downtime and prediction errors.

3. Regulatory Confidence

Lifecycle governance ensures enterprises remain compliant even as laws evolve.

This protects against reputational and financial risk.

4. Scalable Intelligence

MLLM creates replicable, governed pipelines — enabling enterprises to scale AI use cases globally with confidence and consistency.

5. Strategic Agility

AI Model Lifecycle Management transforms enterprises into learning organizations — capable of adjusting strategy dynamically as data insights evolve.

“In a world of algorithmic competition, lifecycle mastery becomes the ultimate moat.”

Challenges on the Path to Maturity

While MLLM offers immense potential, implementation is complex.

Common Obstacles

- Fragmented Infrastructure: Models live across disconnected environments.

- Talent Silos: Data scientists, engineers, and compliance officers often operate in isolation.

- Data Drift & Model Decay: Without automated monitoring, model accuracy erodes silently.

- Governance Overload: Manual audit processes slow innovation.

Path Forward

Successful enterprises adopt federated MLLM architectures — combining centralized governance with decentralized execution.

They empower teams with autonomy while maintaining unified oversight through AI control planes.

Emerging Horizons: The Future of Lifecycle Intelligence

As enterprises evolve, so too will the nature of AI lifecycle management.

Tomorrow’s MLLM will look less like a pipeline and more like an autonomous nervous system — capable of sensing, learning, and self-optimizing in real time.

1. Autonomous Lifecycle Management

AI systems that automatically retrain, redeploy, and recalibrate themselves — no human intervention required.

2. Generative AI for Model Engineering

Using LLMs to draft model architectures, code snippets, and test suites, which can shorten engineering cycles considerably.

3. Real-Time Model Adaptation

Dynamic models that evolve with live data streams, continuously aligning with market behavior and environmental conditions.

4. Unified AI Control Planes

Enterprise-grade interfaces combining observability, governance, and orchestration — a single pane of glass for all AI operations.

5. Sustainability by Design

Energy-efficient retraining cycles, model compression, and carbon tracking will redefine responsible AI operations.

In this future, lifecycle management isn’t maintenance — it’s metabolism.

AI becomes a living, evolving asset, synchronized with business rhythm and environmental awareness.

Implementation Blueprint: How to Operationalize MLLM in the Enterprise

A pragmatic roadmap for enterprise leaders:

- Assess Maturity
  - Identify gaps in your data, model, and governance workflows.
  - Establish KPIs for AI trust, scalability, and performance.

- Adopt Modular Architecture
  - Deploy flexible MLLM components that integrate with existing systems (CRM, ERP, analytics stacks).

- Automate the Pipeline
  - Implement CI/CD for AI, integrating tools like MLflow, Kubeflow, and Airflow.

- Establish Governance Frameworks
  - Embed bias testing, model explainability, and compliance checkpoints.

- Enable Continuous Monitoring
  - Use AI-driven anomaly detection for drift and degradation.

- Foster Cross-Functional Collaboration
  - Align data scientists, engineers, and compliance leaders through shared dashboards.

- Iterate and Evolve
  - Treat the lifecycle itself as a learning system, adapting processes as data maturity grows.

Case in Point: Lifecycle Intelligence in Action

Finance

A global bank uses automated MLLM pipelines to retrain fraud detection models daily.

What used to take three months now happens overnight — increasing detection rates by 24%.

Healthcare

A diagnostics company integrates lifecycle monitoring to track AI predictions on new patient data, ensuring model precision under regulatory scrutiny.

Retail

A fashion brand employs MLLM to continuously update recommendation engines — learning from seasonal patterns and real-time demand signals.

Each use case reinforces one truth: the enterprise of the future runs on living intelligence.

Conclusion: Building the Self-Evolving Enterprise

In an era where every decision can be augmented by data, AI Model Lifecycle Management is the foundation of sustained intelligence.

It transforms AI from a set of models into a cohesive ecosystem of learning, governance, and adaptation.

It ensures every prediction, every automation, every insight remains accountable and current.

Enterprises that embrace MLLM today are not just scaling AI — they are architecting self-renewing intelligence engines capable of learning alongside their markets, their customers, and their purpose.

“AI excellence isn’t achieved in a lab — it’s sustained in lifecycle.”

Key Takeaways

- AI Model Lifecycle Management (MLLM) operationalizes AI across its full journey — from data to deployment to continuous learning.

- MLOps automation, governance, and observability are the pillars of scalable intelligence.

- MLLM ensures trust, compliance, and ethical alignment across every AI model.

- Enterprises adopting lifecycle maturity gain agility, resilience, and strategic foresight.

- The future is self-optimizing AI ecosystems — where intelligence is not built, but evolved.